Services, Load Balancing, and Networking

Service

from Service

An abstract way to expose an application running on a set of Pods as a network service.

Kubernetes Pods are mortal. They are born and when they die, they are not resurrected. If you use a Deployment to run your app, it can create and destroy Pods dynamically.

Each Pod gets its own IP address, however in a Deployment, the set of Pods running in one moment in time could be different from the set of Pods running that application a moment later.

In Kubernetes, a Service is an abstraction which defines a logical set of Pods and a policy by which to access them. The set of Pods targeted by a Service is usually determined by a selector.

Defining a Service:

apiVersion: v1

kind: Service

metadata:

name: my-service

spec:

selector:

app: MyApp

ports:

- protocol: TCP

port: 80

targetPort: 9376 # Pods listen on TCP port 9376

Kubernetes assigns this Service an IP address (sometimes called the “cluster IP”), which is used by the Service proxies.

The controller for the Service selector continuously scans for Pods that match its selector.

The default protocol for Services is TCP.

As many Services need to expose more than one port, Kubernetes supports multiple port definitions on a Service object.

Virtual IPs and service proxies

very node in a Kubernetes cluster runs a kube-proxy. kube-proxy is responsible for implementing a form of virtual IP for Services of type other than ExternalName.

By default, kube-proxy in userspace mode chooses a backend via a round-robin algorithm.

IPVS proxy mode:

- rr: round-robin

- lc: least connection (smallest number of open connections)

- dh: destination hashing

- sh: source hashing

- sed: shortest expected delay

- nq: never queue

Multi-Port Services

For some Services, you need to expose more than one port.

apiVersion: v1

kind: Service

metadata:

name: my-service

spec:

selector:

app: MyApp

ports:

- name: http

protocol: TCP

port: 80

targetPort: 9376

- name: https

protocol: TCP

port: 443

targetPort: 9377

DNS

You can (and almost always should) set up a DNS service for your Kubernetes cluster using an add-on.

Headless Services

Sometimes you don’t need load-balancing and a single Service IP. In this case, you can create what are termed “headless” Services, by explicitly specifying “None” for the cluster IP (.spec.clusterIP).

For headless Services, a cluster IP is not allocated, kube-proxy does not handle these Services, and there is no load balancing or proxying done by the platform for them.

Publishing Services (ServiceTypes)

For some parts of your application (for example, frontends) you may want to expose a Service onto an external IP address, that’s outside of your cluster.

Kubernetes ServiceTypes allow you to specify what kind of Service you want. The default is ClusterIP.

Ingress:

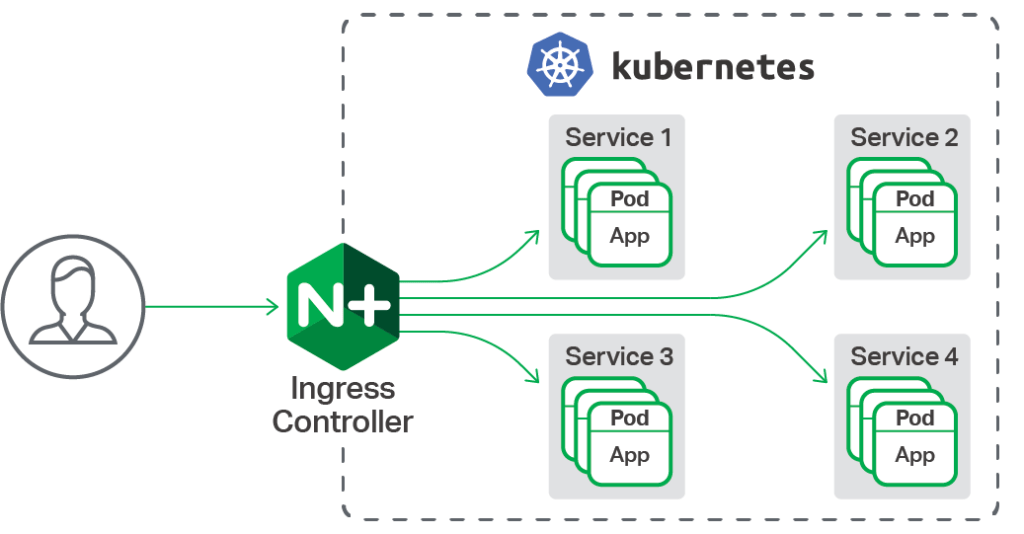

You can also use Ingress to expose your Service. Ingress is not a Service type, but it acts as the entry point for your cluster. It lets you consolidate your routing rules into a single resource as it can expose multiple services under the same IP address.

DNS for Services and Pods

from DNS for Services and Pods

Every Service defined in the cluster (including the DNS server itself) is assigned a DNS name. By default, a client Pod’s DNS search list will include the Pod’s own namespace and the cluster’s default domain.

Connecting Applications with Services

from Connecting Applications with Services

By default, Docker uses host-private networking, so containers can talk to other containers only if they are on the same machine. In order for Docker containers to communicate across nodes, there must be allocated ports on the machine’s own IP address, which are then forwarded or proxied to the containers.

Ingress

from Ingress

What is Ingress?

Ingress exposes HTTP and HTTPS routes from outside the cluster to services within the cluster. Traffic routing is controlled by rules defined on the Ingress resource.

internet

|

[ Ingress ]

--|-----|--

[ Services ]

An API object that manages external access to the services in a cluster, typically HTTP.

Ingress can provide load balancing, SSL termination and name-based virtual hosting.

- Node: A worker machine in Kubernetes, part of a cluster.

- Cluster: A set of Nodes that run containerized applications managed by Kubernetes. For this example, and in most common Kubernetes deployments, nodes in the cluster are not part of the public internet.

- Edge router: A router that enforces the firewall policy for your cluster. This could be a gateway managed by a cloud provider or a physical piece of hardware.

- Cluster network: A set of links, logical or physical, that facilitate communication within a cluster according to the Kubernetes networking model.

- Service: A Kubernetes Service that identifies a set of Pods using label selectors. Unless mentioned otherwise, Services are assumed to have virtual IPs only routable within the cluster network.

Prerequisites

You must have an ingress controller to satisfy an Ingress. Only creating an Ingress resource has no effect.

You may need to deploy an Ingress controller such as ingress-nginx. You can choose from a number of Ingress controllers.

An Ingress with no rules sends all traffic to a single default backend.

Single Service Ingress:

apiVersion: networking.k8s.io/v1beta1

kind: Ingress

metadata:

name: test-ingress

spec:

backend:

serviceName: testsvc

servicePort: 80

Simple fanout

foo.bar.com -> 178.91.123.132 -> / foo service1:4200

/ bar service2:8080

would require an Ingress such as:

apiVersion: networking.k8s.io/v1beta1

kind: Ingress

metadata:

name: simple-fanout-example

annotations:

nginx.ingress.kubernetes.io/rewrite-target: /

spec:

rules:

- host: foo.bar.com

http:

paths:

- path: /foo

backend:

serviceName: service1

servicePort: 4200

- path: /bar

backend:

serviceName: service2

servicePort: 8080

Name based virtual hosting

Name-based virtual hosts support routing HTTP traffic to multiple host names at the same IP address.

foo.bar.com --| |-> foo.bar.com service1:80

| 178.91.123.132 |

bar.foo.com --| |-> bar.foo.com service2:80

The following Ingress:

apiVersion: networking.k8s.io/v1beta1

kind: Ingress

metadata:

name: name-virtual-host-ingress

spec:

rules:

- host: foo.bar.com

http:

paths:

- backend:

serviceName: service1

servicePort: 80

- host: bar.foo.com

http:

paths:

- backend:

serviceName: service2

servicePort: 80

For example, the following Ingress resource will route traffic requested for first.bar.com to service1, second.foo.com to service2, and any traffic to the IP address without a hostname defined in request (that is, without a request header being presented) to service3.

apiVersion: networking.k8s.io/v1beta1

kind: Ingress

metadata:

name: name-virtual-host-ingress

spec:

rules:

- host: first.bar.com

http:

paths:

- backend:

serviceName: service1

servicePort: 80

- host: second.foo.com

http:

paths:

- backend:

serviceName: service2

servicePort: 80

- http:

paths:

- backend:

serviceName: service3

servicePort: 80

TLS

You can secure an Ingress by specifying a Secret that contains a TLS private key and certificate. Currently the Ingress only supports a single TLS port, 443, and assumes TLS termination.

Secret:

apiVersion: v1

kind: Secret

metadata:

name: testsecret-tls

namespace: default

data:

tls.crt: base64 encoded cert

tls.key: base64 encoded key

type: kubernetes.io/tls

Ingress:

apiVersion: networking.k8s.io/v1beta1

kind: Ingress

metadata:

name: tls-example-ingress

spec:

tls:

- hosts:

- sslexample.foo.com

secretName: testsecret-tls

rules:

- host: sslexample.foo.com

http:

paths:

- path: /

backend:

serviceName: service1

servicePort: 80

Ingress Controllers

from Ingress Controllers

In order for the Ingress resource to work, the cluster must have an ingress controller running.

Enterprise-grade application delivery for Kubernetes, from NGINX Ingress Controller for Kubernetes

Network Policies

from Network Policies

A network policy is a specification of how groups of pods are allowed to communicate with each other and other network endpoints.

NetworkPolicy resources use labels to select pods and define rules which specify what traffic is allowed to the selected pods.

Adding entries to Pod /etc/hosts with HostAliases

from Adding entries to Pod /etc/hosts with HostAliases

Adding entries to a Pod’s /etc/hosts file provides Pod-level override of hostname resolution when DNS and other options are not applicable